D3 is a fantastic framework for visualization: besides its beautiful output, the framework itself is elegantly designed. However, that elegance and its combination of several technologies (JavaScript + SVG + DOM manipulation) make D3 very hard to learn. Luckily, there is a huge range of D3 examples out there. Cargo cults to the rescue…

Cargo cults were the way Melanesians responded when they were suddenly confronted with military forces in the Second World War: strangers arrived wearing colorful clothes, marched up and down strips of land, and suddenly parachutes with cargo crates were dropped. After the strangers left, the islanders were convinced that, if they just behaved like the strangers, cargo would come to them. Like the islanders, we don’t have to understand the intricate details of D3 – we just have to perform the right rituals to produce the same visualizations…

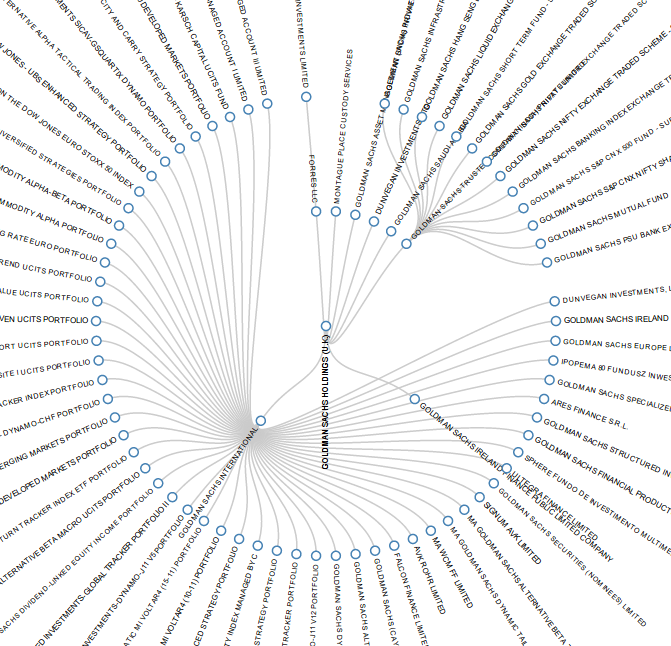

For example, let’s try to re-create a Reingold-Tilford tree of the Goldman Sachs network.

Things I’ll work with:

The Reingold-Tilford tree example

The Goldman Sachs network over at OpenCorporates (you can get the data in their JSON format)

The first thing I always look at is how the data is formatted for the example. Most examples ship with data that helps you understand how it is laid out for that specific example. This also means that if we bring our data into the same shape, we’ll be able to use it in the example. So let’s look at the “flare.json” format used in the example. It is a very simple JSON format where each entry has two attributes: a name and an array of children. This forms the tree for our tree layout. The data we have from OpenCorporates looks different: each record has a parent and a child. So let’s bring it into the same form.

First, I’ll get the JavaScript bits I’ll need: D3 and Underscore (I like Underscore for its functions that make working with data a lot nicer). Then I’ll create an HTML page similar to the example. I’ll check where the code loads the “flare.json” file and put my own JSON there instead (the one from OpenCorporates linked above). (Commit note: this changes later on, since OpenCorporates does not support CORS…)

I then convert the data using a JavaScript function (in our case a recursive one that crawls down the tree):

var getChildren = function(r,parent) {

var c = _.filter(r, function(x) { return x.parent_name == parent; }); // declare c locally so the recursion does not leak a global

if (c.length) {

return ({"name":parent,

children: _.map(c,function(x) {return (getChildren(r,x.child_name))})})

}

else {

return ({"name":parent })

}

}

root=getChildren(root,"GOLDMAN SACHS HOLDINGS (U.K.)")
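For readers more at home in Python, the same reshaping can be sketched roughly as follows. This is only an illustration, not the code behind the visualization: the `rows` list is made-up sample data that mimics the parent_name/child_name fields used above, and the subsidiary names are hypothetical. Like the JavaScript version, it assumes the data contains no cycles.

```python
import json

# Made-up sample rows in the parent/child shape described above.
# Only the root name comes from the post; the subsidiaries are hypothetical.
rows = [
    {"parent_name": "GOLDMAN SACHS HOLDINGS (U.K.)", "child_name": "EXAMPLE SUBSIDIARY A"},
    {"parent_name": "GOLDMAN SACHS HOLDINGS (U.K.)", "child_name": "EXAMPLE SUBSIDIARY B"},
    {"parent_name": "EXAMPLE SUBSIDIARY A", "child_name": "EXAMPLE SUBSIDIARY C"},
]


def get_children(rows, parent):
    """Recursively build a flare.json-style node for `parent`."""
    children = [r["child_name"] for r in rows if r["parent_name"] == parent]
    node = {"name": parent}
    if children:
        # Only nodes that actually have children get a "children" array.
        node["children"] = [get_children(rows, c) for c in children]
    return node


tree = get_children(rows, "GOLDMAN SACHS HOLDINGS (U.K.)")
print(json.dumps(tree, indent=2))
```

The result has the same name/children shape as “flare.json”, so it could be dumped to a file and loaded by the example unchanged.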

Finally, I adjust the layout a bit to make space for the labels, etc.

First we will scrape and collect the data. I will use Python as the programming language, but you could use any other. (If you don’t know how to program, don’t worry.) I’ll use Gephi to analyze and visualize the network in the next step. Now let’s see what we can find…

IATI’s data is collected on its data platform, the IATI registry. The site consists mainly of links to the data in XML format. The IATI standard is an XML-based standard that took policy and data wonks years to develop – but it’s an accepted standard in the field, and many organizations use it. Python, luckily, has good tools to deal with XML. The IATI registry also has an API, with examples of how to use it. It returns JSON, which is great, since that’s even simpler to deal with than XML. Let’s go!

From the examples, we learn that we can use the following URL to query for activities in a specific country (Kenya in our example):

http://www.iatiregistry.org/api/search/dataset?filetype=activity&country=KE&all_fields=1&limit=200

If you take a close look at the resulting JSON, you will notice that it contains a results array ([]) of objects ({}) and that each object has a download_url attribute. This is the link to the XML report.

First we’ll need to get all the URLs:

>>> import urllib2, json #import the libraries we need

>>> url="http://www.iatiregistry.org/api/search/" + "dataset?filetype=activity&country=KE&all_fields=1&limit=200"

>>> u=urllib2.urlopen(url)

>>> j=json.load(u)

>>> j['results'][0]

That gives us:

{u'author_email': u'[email protected]',

u'ckan_url': u'http://iatiregistry.org/dataset/afdb-kenya',

u'download_url': u'http://www.afdb.org/fileadmin/uploads/afdb/Documents/Generic-Documents/IATIKenyaData.xml',

u'entity_type': u'package',

u'extras': {u'activity_count': u'5',

u'activity_period-from': u'2010-01-01',

u'activity_period-to': u'2011-12-31',

u'archive_file': u'no',

u'country': u'KE',

u'data_updated': u'2013-06-26 09:44',

u'filetype': u'activity',

u'language': u'en',

u'publisher_country': u'298',

u'publisher_iati_id': u'46002',

u'publisher_organization_type': u'40',

u'publishertype': u'primary_source',

u'secondary_publisher': u'',

u'verified': u'no'},

u'groups': [u'afdb'],

u'id': u'b60a6485-de7e-4b76-88de-4787175373b8',

u'index_id': u'3293116c0629fd243bb2f6d313d4cc7d',

u'indexed_ts': u'2013-07-02T00:49:52.908Z',

u'license': u'OKD Compliant::Other (Attribution)',

u'license_id': u'other-at',

u'metadata_created': u'2013-06-28T13:15:45.913Z',

u'metadata_modified': u'2013-07-02T00:49:52.481Z',

u'name': u'afdb-kenya',

u'notes': u'',

u'res_description': [u''],

u'res_format': [u'IATI-XML'],

u'res_url': [u'http://www.afdb.org/fileadmin/uploads/afdb/Documents/Generic-Documents/IATIKenyaData.xml'],

u'revision_id': u'91f47131-529c-4367-b7cf-21c8b81c2945',

u'site_id': u'iatiregistry.org',

u'state': u'active',

u'title': u'Kenya'}

So j['results'] is our array of result objects, and we want to get the download_url properties from its members. I’ll do this with a list comprehension.

>>> urls=[i['download_url'] for i in j['results']]

>>> urls[0:3]

[u'http://www.afdb.org/fileadmin/uploads/afdb/Documents/Generic-Documents/IATIKenyaData.xml', u'http://www.ausaid.gov.au/data/Documents/AusAID_IATI_Activities_KE.xml', u'http://www.cafod.org.uk/extra/data/iati/IATIFile_Kenya.xml']
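If you are on Python 3, where urllib2 no longer exists, the same step might look roughly like the sketch below. It builds the query string with `urlencode` instead of gluing strings together, and it runs against a trimmed, partly made-up stand-in for the API response rather than the live registry. The second result entry is hypothetical and only there to show how an entry without a download_url could be skipped.

```python
import json
from urllib.parse import urlencode

BASE = "http://www.iatiregistry.org/api/search/dataset"
params = {"filetype": "activity", "country": "KE", "all_fields": 1, "limit": 200}
url = BASE + "?" + urlencode(params)  # urlencode only builds the part after the "?"

# Trimmed stand-in for the API response, keeping only the fields used here.
sample_response = json.loads("""
{"results": [
  {"name": "afdb-kenya",
   "download_url": "http://www.afdb.org/fileadmin/uploads/afdb/Documents/Generic-Documents/IATIKenyaData.xml"},
  {"name": "hypothetical-entry-without-url"}
]}
""")

# Keep only the entries that actually carry a download_url.
urls = [r["download_url"] for r in sample_response["results"] if r.get("download_url")]
print(url)
print(urls)
```

Against the real API you would fetch `url` with `urllib.request.urlopen` and pass the response to `json.load`, just as in the session above.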

Fantastic. Now we have a list of URLs of XML reports. Some people might say, “Now you’ve got two problems” – but I think we’re one step further.

We can now go and explore the reports. Let’s start to develop what we want to do with the first report. To do this we’ll need lxml.etree, the XML library. Let’s use it to parse the XML from the first URL we grabbed.

>>> import lxml.etree

>>> u=urllib2.urlopen(urls[0]) #open the first url

>>> r=lxml.etree.fromstring(u.read()) #parse the XML

>>> r

<Element iati-activities at 0x2964730>

Perfect: we have now parsed the data from the first URL.

To understand what we’ve done, why don’t you open the XML report in your browser and look at it? Notice that every activity has its own tag iati-activity and below that a recipient-country tag which tells us which country gets the money.

We’ll want to make sure that we only include activities in Kenya – some reports contain activities in multiple countries – and therefore we have to select for this. We’ll do this using the XPath query language.

>>> rc=r.xpath('//recipient-country[@code="KE"]')

>>> activities=[i.getparent() for i in rc]

>>> activities[0]

<Element iati-activity at 0x2972500>
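If lxml isn’t available, a similar selection can be sketched with the standard library’s xml.etree.ElementTree. Note that this swaps in a different library from the one used above: ElementTree supports only a subset of XPath and its elements have no getparent(), so the sketch filters the activities directly instead of walking up from recipient-country. The miniature report is made up for illustration.

```python
import xml.etree.ElementTree as ET

# Made-up miniature report: two activities, only one of them in Kenya.
SAMPLE = """
<iati-activities>
  <iati-activity>
    <recipient-country code="KE">Kenya</recipient-country>
    <participating-org role="Funding">Example Funder</participating-org>
  </iati-activity>
  <iati-activity>
    <recipient-country code="TZ">Tanzania</recipient-country>
    <participating-org role="Funding">Another Funder</participating-org>
  </iati-activity>
</iati-activities>
"""

root = ET.fromstring(SAMPLE)

# Keep every iati-activity that has a recipient-country child with code="KE".
activities = [
    a for a in root.iter("iati-activity")
    if a.find('recipient-country[@code="KE"]') is not None
]
print(len(activities))
```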

Now we have an array of activities – a good place to start. If you look closely at activities, there are several participating-org entries with different roles. The roles we’re interested in are “Funding” and “Implementing”: who gives money and who receives money. We don’t care right now about amounts.

>>> funders=activities[0].xpath('./participating-org[@role="Funding"]')

>>> implementers=activities[0].xpath('./participating-org[@role="Implementing"]')

>>> print funders[0].text

Special Relief Funds

>>> print implementers[0].text

WORLD FOOD PROGRAM - WFP - KENYA OFFICE

Ok, now let’s group them together, funders first, implementers later. We’ll do this with a list comprehension again.

>>> e=[[(j.text,i.text) for i in implementers] for j in funders]

>>> e

[[('Special Relief Funds', 'WORLD FOOD PROGRAM - WFP - KENYA OFFICE')]]

Hmm. There’s one bracket too many. I’ll remove it with a reduce…

>>> e=reduce(lambda x,y: x+y,e,[])

>>> e

[('Special Relief Funds', 'WORLD FOOD PROGRAM - WFP - KENYA OFFICE')]
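The reduce trick is one of several ways to get rid of the extra level of nesting. The sketch below, written for Python 3 (where reduce lives in functools), compares it with a few alternatives on made-up funder and implementer names. All four variants should give the same flat list of pairs.

```python
from functools import reduce
from itertools import chain, product

funders = ["Funder A", "Funder B"]                  # hypothetical names
implementers = ["Implementer X", "Implementer Y"]   # hypothetical names

# The nested list comprehension from above: one inner list per funder.
nested = [[(f, i) for i in implementers] for f in funders]

# 1. Concatenate the inner lists pairwise, as in the session above.
flat_reduce = reduce(lambda x, y: x + y, nested, [])

# 2. Chain the inner lists together without building intermediate lists.
flat_chain = list(chain.from_iterable(nested))

# 3. Avoid the nesting in the first place with a two-level comprehension.
flat_direct = [(f, i) for f in funders for i in implementers]

# 4. Let itertools build every funder/implementer combination.
flat_product = list(product(funders, implementers))

print(flat_direct)
```

For the handful of pairs per activity here, the choice is a matter of taste; reduce with list concatenation copies the growing list at each step, which only starts to matter for long lists.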

Now we’re talking. Because we’ll do this for each activity in each report, we’ll put it into a function.

>>> def extract_funders_implementers(a):

...     f=a.xpath('./participating-org[@role="Funding"]')

...     i=a.xpath('./participating-org[@role="Implementing"]')

...     e=[[(j.text,k.text) for k in i] for j in f] # pair every funder with every implementer

...     return reduce(lambda x,y: x+y,e,[]) # flatten the nested list

...

>>> extract_funders_implementers(activities[0])

[('Special Relief Funds', 'WORLD FOOD PROGRAM - WFP - KENYA OFFICE')]

Now we can do this for all the activities!

>>> fis=[extract_funders_implementers(i) for i in activities]

>>> fis

[[('Special Relief Funds', 'WORLD FOOD PROGRAM - WFP - KENYA OFFICE')], [('African Development Fund', 'KENYA NATIONAL HIGHWAY AUTHORITY')], [('African Development Fund', 'MINISTRY OF WATER DEVELOPMENT')], [('African Development Fund', 'MINISTRY OF WATER DEVELOPMENT')], [('African Development Fund', 'KENYA ELECTRICITY TRANSMISSION CO. LTD')]]

Yiihaaa! But we have more than one report, so we’ll need to create a function here as well to do this for each report…

>>> def process_report(report_url):

...     try:

...         u=urllib2.urlopen(report_url)

...         r=lxml.etree.fromstring(u.read())

...     except (urllib2.URLError, lxml.etree.XMLSyntaxError):

...         return [] # return an empty list if the download or the parsing fails

...     activities=[i.getparent() for i in

...                 r.xpath('//recipient-country[@code="KE"]')]

...     return reduce(lambda x,y: x+y,[extract_funders_implementers(i) for i in activities],[])

...

>>> process_report(urls[0])
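To try the parsing logic without hitting the network, it can help to separate it from the download. The sketch below does that in Python 3 with the standard library’s xml.etree.ElementTree rather than lxml, so it is a stand-in for the function above, not a copy of it. The function name `pairs_from_report` and both sample documents are made up for illustration; the organization names are borrowed from the output shown earlier.

```python
import xml.etree.ElementTree as ET
from itertools import product


def pairs_from_report(xml_bytes, country="KE"):
    """Return (funder, implementer) pairs for activities in `country`."""
    try:
        root = ET.fromstring(xml_bytes)
    except ET.ParseError:
        return []  # treat a malformed report as empty
    pairs = []
    for activity in root.iter("iati-activity"):
        # Skip activities that do not list the requested recipient country.
        if activity.find('recipient-country[@code="%s"]' % country) is None:
            continue
        funders = [o.text for o in activity.findall('participating-org[@role="Funding"]')]
        implementers = [o.text for o in activity.findall('participating-org[@role="Implementing"]')]
        pairs.extend(product(funders, implementers))
    return pairs


# Made-up miniature report in the shape described above.
GOOD = b"""<iati-activities>
  <iati-activity>
    <recipient-country code="KE"/>
    <participating-org role="Funding">Special Relief Funds</participating-org>
    <participating-org role="Implementing">WORLD FOOD PROGRAM - WFP - KENYA OFFICE</participating-org>
  </iati-activity>
</iati-activities>"""
BAD = b"<iati-activities><iati-activity>"  # truncated on purpose

print(pairs_from_report(GOOD))
print(pairs_from_report(BAD))
```

Keeping the download in a thin wrapper around a function like this means a broken or unreachable report only costs you that one report instead of the whole run.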

Works great – notice how I removed the additional brackets by using another reduce? Now guess what? We can do this for all the reports! Ready? Go!

>>> fis=[process_report(i) for i in urls]

>>> fis=reduce(lambda x,y: x+y, fis, [])

>>> fis[0:10]

[('Special Relief Funds', 'WORLD FOOD PROGRAM - WFP - KENYA OFFICE'), ('African Development Fund', 'KENYA NATIONAL HIGHWAY AUTHORITY'), ('African Development Fund', 'MINISTRY OF WATER DEVELOPMENT'), ('African Development Fund', 'MINISTRY OF WATER DEVELOPMENT'), ('African Development Fund', 'KENYA ELECTRICITY TRANSMISSION CO. LTD'), ('CAFOD', 'St. Francis Community Development Programme'), ('CAFOD', 'Catholic Diocese of Marsabit'), ('CAFOD', 'Assumption Sisters of Nairobi'), ('CAFOD', "Amani People's Theatre"), ('CAFOD', 'Resources Oriented Development Initiatives (RODI)')]

Good! Now let’s save this as a CSV:

>>> import csv

>>> f=open("kenya-funders.csv","wb")

>>> w=csv.writer(f)

>>> w.writerow(("Funder","Implementer"))

>>> for i in fis:

...     w.writerow([j.encode("utf-8") if j else "None" for j in i])

...

>>> f.close()
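On Python 3 the CSV step changes slightly: files are opened in text mode with newline="" and an explicit encoding, and the manual .encode("utf-8") goes away. The sketch below writes to an in-memory buffer so it can run anywhere; the second row, with a missing implementer name, is hypothetical and only there to show the "None" fallback.

```python
import csv
import io

fis = [
    ("Special Relief Funds", "WORLD FOOD PROGRAM - WFP - KENYA OFFICE"),
    ("CAFOD", None),  # hypothetical row with a missing implementer name
]

# In-memory stand-in for:
#   open("kenya-funders.csv", "w", newline="", encoding="utf-8")
buf = io.StringIO(newline="")
writer = csv.writer(buf)
writer.writerow(("Funder", "Implementer"))
for pair in fis:
    # Replace missing names with the string "None", as in the session above.
    writer.writerow([name if name else "None" for name in pair])

print(buf.getvalue())
```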

Now we can clean this up using Refine and examine it with Gephi in part II.
