Mobile data collection

Joachim Mangilima - December 16, 2014 in Skillshare, Tech

This blog post is based on the School of Data skillshare I hosted on mobile data collection. Thanks to everyone who took part in it!

Recently, mobile devices have become an increasingly popular means of data collection. Data is gathered through an application or electronic form on a mobile device such as a smartphone or a tablet. These devices make it possible to gather data regardless of the time or the respondent's location.

The benefits of mobile data collection include quicker response times and the ability to reach previously hard-to-reach target groups. In this blog post I share some of the tools that I have been using, and building applications on top of, for the past five years.

- Open Data Kit

Open Data Kit (ODK) is a free and open-source set of tools which help researchers author, field, and manage mobile data collection solutions. ODK provides an out-of-the-box solution for users to:

- Build a data collection form or survey;

- Collect the data on a mobile device and send it to a server; and

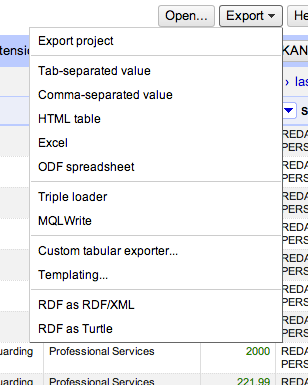

- Aggregate the collected data on a server and extract it in useful formats.

ODK allows data to be collected on a mobile device even without an Internet connection or mobile carrier service at the time of collection; completed forms are stored on the device and submitted to an online server once connectivity is available.
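The offline behaviour described above follows a store-and-forward pattern. The sketch below is a generic Python illustration of that pattern, not ODK's actual implementation: the `OfflineQueue` class and the `is_online` and `send` callbacks are hypothetical stand-ins for the device's connectivity check and the upload to a server.

```python
from collections import deque
from typing import Callable, Deque, Dict, List

Submission = Dict[str, object]

class OfflineQueue:
    """Hypothetical store-and-forward queue for completed forms."""

    def __init__(self, is_online: Callable[[], bool],
                 send: Callable[[Submission], bool]) -> None:
        self.is_online = is_online  # stand-in for the device's connectivity check
        self.send = send            # stand-in for the upload; True means success
        self.pending: Deque[Submission] = deque()

    def save(self, submission: Submission) -> None:
        # Completed forms are always stored on the device first.
        self.pending.append(submission)

    def flush(self) -> int:
        """Upload queued forms in order while online; return how many were sent."""
        sent = 0
        while self.pending and self.is_online():
            if not self.send(self.pending[0]):
                break  # upload failed: keep the form for the next attempt
            self.pending.popleft()
            sent += 1
        return sent


# Usage: collect two forms offline, then upload when a connection appears.
online = False
server: List[Submission] = []

def upload(submission: Submission) -> bool:
    server.append(submission)
    return True

queue = OfflineQueue(is_online=lambda: online, send=upload)
queue.save({"household_id": 1, "members": 4})
queue.save({"household_id": 2, "members": 6})
print(queue.flush())  # → 0 (no connectivity yet, both forms stay on the device)
online = True
print(queue.flush())  # → 2
```

Removing a form from the queue only after a successful upload means a dropped connection does not lose data; the form is simply retried on the next flush.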

ODK runs on the Android platform and supports a wide variety of question types in its electronic forms, such as text, number, location, audio, video, image and barcode.
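To illustrate what typed questions offer, here is a minimal Python sketch that checks an answer against its question's type before a form is accepted. The `Question` class, the type names and the latitude/longitude range checks are assumptions made for illustration; ODK's own form definitions and validation are richer than this.

```python
from dataclasses import dataclass
from typing import Any, Optional

@dataclass
class Question:
    name: str
    qtype: str  # hypothetical type names: "text", "integer", "geopoint"

def validate(question: Question, answer: Any) -> Optional[str]:
    """Return an error message, or None if the answer fits the question type."""
    if question.qtype == "text":
        if not isinstance(answer, str):
            return f"{question.name}: expected text"
    elif question.qtype == "integer":
        # bool is a subclass of int in Python, so exclude it explicitly
        if not isinstance(answer, int) or isinstance(answer, bool):
            return f"{question.name}: expected a whole number"
    elif question.qtype == "geopoint":
        # Expect a (latitude, longitude) pair of numbers in decimal degrees
        if (not isinstance(answer, tuple) or len(answer) != 2
                or not all(isinstance(v, (int, float)) for v in answer)
                or not -90 <= answer[0] <= 90
                or not -180 <= answer[1] <= 180):
            return f"{question.name}: expected (latitude, longitude)"
    else:
        return f"{question.name}: unknown type {question.qtype!r}"
    return None


age = Question("age", "integer")
home = Question("home_location", "geopoint")
print(validate(age, 34))             # → None
print(validate(age, "thirty"))       # → age: expected a whole number
print(validate(home, (-6.8, 39.3)))  # → None
```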

- CommCare

CommCare is an open-source mobile platform designed for data collection, client management, decision support, and behavior change communication. CommCare consists of two main technology components: CommCare Mobile and CommCareHQ.

The mobile application is used by client-facing community health workers and enumerators during visits, both as a data collection tool and as an educational tool, and supports optional audio, image, video and GPS location prompts. Users build applications through the CommCareHQ website, which runs on a cloud-based server.

CommCare supports J2ME feature phones, Android phones and Android tablets. It can capture photos and GPS readings, supports multiple languages and non-Roman scripts, and can integrate multimedia (image, audio and video).

The CommCare mobile versions let applications run offline; collected data is transmitted to CommCareHQ once mobile data (GPRS) or Wi-Fi connectivity becomes available.

- GeoODK

GeoODK provides a way to collect and store geo-referenced information, along with a suite of tools to visualize, analyze and manipulate ground data for specific needs. It helps users understand their data for decision-making in research, business, disaster management, agriculture and more.

It is based on Open Data Kit (ODK) but has been extended with offline and online mapping, support for custom map layers, and new spatial widgets for collecting points, polygons and GPS traces.
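Once a GPS trace has been collected as a series of points, a common next step is to compute its length. The minimal Python sketch below assumes the trace is a list of (latitude, longitude) pairs in decimal degrees and uses the haversine formula with a mean Earth radius of 6,371 km; it is a generic technique, not necessarily how GeoODK itself computes distances, and the sample trace is made up.

```python
import math
from typing import List, Tuple

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres

def haversine_m(p: Tuple[float, float], q: Tuple[float, float]) -> float:
    """Great-circle distance in metres between two (lat, lon) points."""
    lat1, lon1 = map(math.radians, p)
    lat2, lon2 = map(math.radians, q)
    dlat = lat2 - lat1
    dlon = lon2 - lon1
    a = (math.sin(dlat / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

def trace_length_m(trace: List[Tuple[float, float]]) -> float:
    """Sum the distances between consecutive points of a GPS trace."""
    return sum(haversine_m(a, b) for a, b in zip(trace, trace[1:]))


# Hypothetical trace: three points on the equator, 0.01 degrees apart
trace = [(0.0, 0.0), (0.0, 0.01), (0.0, 0.02)]
print(round(trace_length_m(trace)))  # roughly 2.2 km
```

Treating the Earth as a sphere keeps the code short; for survey-grade accuracy an ellipsoidal model would be the better choice.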

A single blog post cannot cover every tool for mobile data collection, but other tools worth looking at, each with its own unique features, include OpenXData and EpiSurveyor.

Why Use Mobile Technology to Collect Data

There are several advantages to using mobile technology for data collection, including:

- Fewer skipped questions, since an electronic form can require an answer before the enumerator moves on;

- Immediate (real-time) access to the data on the server, which makes aggregation and analysis much faster;

- Lower data collection costs, since cutting out data entry personnel means fewer staff are needed;

- Better data security through data encryption;

- Support for many data types, such as audio, video, barcodes and GPS locations;

- Higher productivity, since there is no data entry middleman;

- Savings on the printing, storage and management of documents associated with paper-based data collection.
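The point about harder-to-skip questions comes from form logic that the device enforces: a question can be marked as required, and skip logic decides whether it is asked at all. ODK's XLSForm format, for example, has `required` and `relevant` columns for this. The minimal Python sketch below mimics that idea with a hypothetical `Field` class and a made-up household survey; it is an illustration, not how any of the tools above implement it.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Answers = Dict[str, object]

@dataclass
class Field:
    name: str
    required: bool = False
    # Skip logic: the field is only asked when this returns True
    relevant: Optional[Callable[[Answers], bool]] = None

def missing_answers(form: List[Field], answers: Answers) -> List[str]:
    """List required fields that are currently asked but have no answer."""
    missing = []
    for field in form:
        if field.relevant is not None and not field.relevant(answers):
            continue  # skipped by design, so not counted as missing
        if field.required and answers.get(field.name) in (None, ""):
            missing.append(field.name)
    return missing


# Hypothetical household survey
form = [
    Field("has_children", required=True),
    Field("num_children", required=True,
          relevant=lambda a: a.get("has_children") == "yes"),
    Field("comments"),
]

print(missing_answers(form, {"has_children": "no"}))   # → []
print(missing_answers(form, {"has_children": "yes"}))  # → ['num_children']
print(missing_answers(form, {}))                       # → ['has_children']
```

A data collection app could keep the "finalize" step disabled until this list is empty, which is what makes skipping a required question harder than on paper.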