Breaking the Knowledge Barrier: The #OpenData Party in Northern Nigeria

olubabayemi - October 1, 2014

If the only news you have been watching or listening to about Northern Nigeria is of the Boko Haram violence in that region, then you need to know that other news exists: non-governmental organizations and media there are interested in using state and federal government budget data to monitor service delivery, and to make sure funds promised by government reach the communities they were meant for.

This time around, the #OpenData party moved from the Nigerian capital, Abuja, to Gusau, Zamfara, and was held at the Zamfara Zakat and Endowment Board Hall on Thursday, September 25 and Friday, September 26, 2014. Forty participants were all set for this budget data expedition. They included the state Budget Monitoring Group (a coalition of NGOs in Zamfara) coordinated by the DFID (Department for International Development) State Accountability and Voice Initiative (SAVI), and other international NGOs such as Society for Family Health (SFH) and Save the Children, amongst others.

But how do you teach data and its use in a less technology-savvy region? We had to demystify teaching data to this community by engaging in traditional visualization and scraping, which means using paper artwork to visualize the data we had already made available on the Education Budget Tracker. “I never believed we could visualize the education budget data of the federal government as easy as what was on the wall,” exclaimed Ahmed Ibrahim of SAVI.

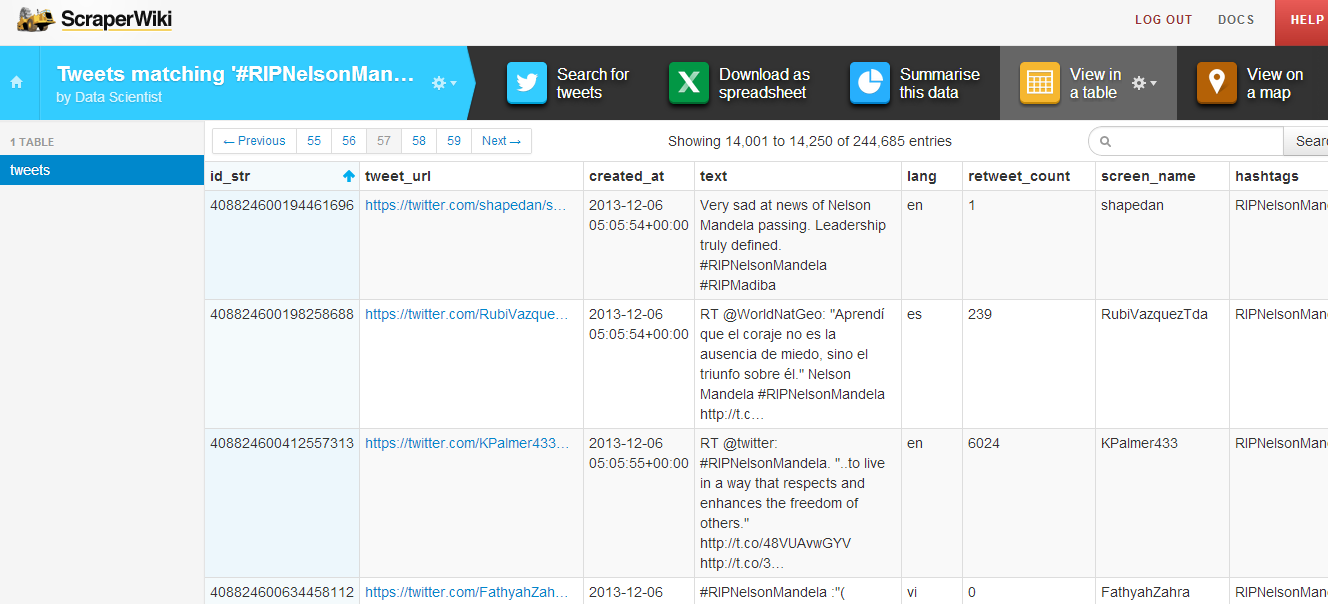

Budgets have become something of a holy grail, especially with state governments in Nigeria, so what mattered most to participants on the first day was how to find budget data and the processes involved in tracking whether services were really delivered as promised in the budget. Finding the state's budget data has been a little bit hectic, but after much advocacy the government has released datasets on the education and health sectors. So what challenges have the NGOs faced in tracking or using this data, given that they have been engaged in budget tracking for a while now?

“Well, it is important to note that getting the government to release the data took us some time and rigorous advocacy, added to the fact that we ourselves needed training on analysis, and telling stories out of the budget data,” explained Joels Terks Abaver of the Christian Association of Non Indigenes. During one of the breakout sessions, access to budget information and training on how to use this budget data emerged as prominent challenges in the resolutions of several groups.

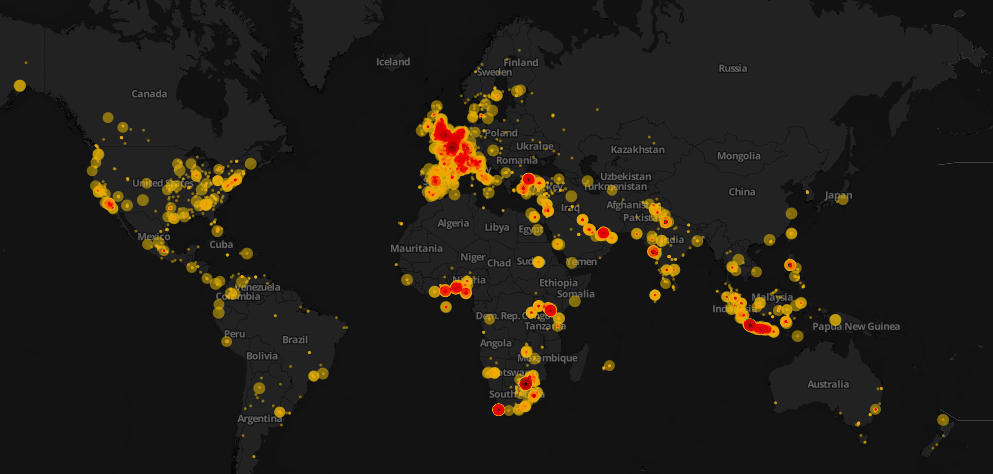

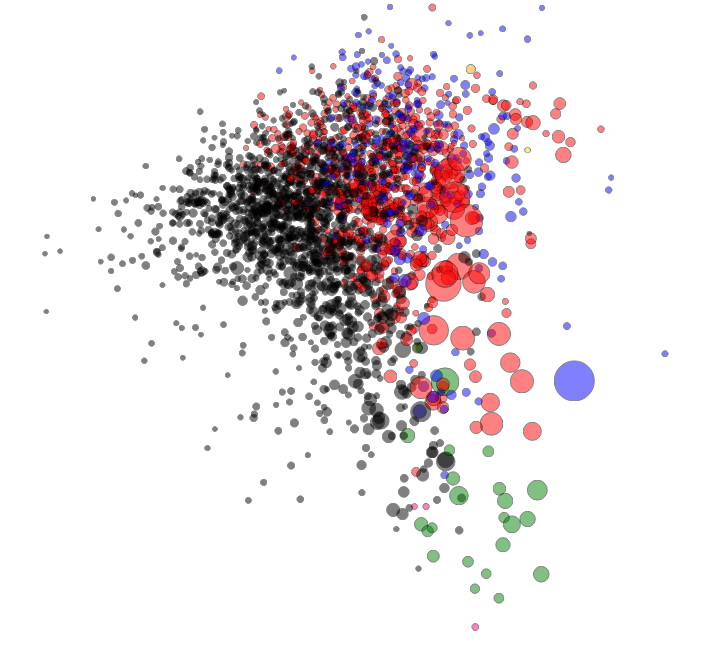

The second day took participants through the data pipelines, while running an expedition on the education and health sector budget data that was presented on the first day. Alas! We discovered a big challenge with this budget data: it was not location-specific! How does one track budget data that does not answer the question of where? When involved in budget tracking, it is important to have a description that states exactly where the funds will go. An example is “Construction of Borehole water pump in Kaura Namoda LGA Primary School”; alternatively, the budget of Kaura Namoda LGA Primary School could be included as a subtitle in the budget document.
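For readers who want to try this check on their own budget lines, here is a minimal Python sketch of the idea. It is not the tool we used at the party. The list of place names and the second line item are made up for illustration, and a real check would need the full list of LGAs and communities in the state. It simply looks for a known place name in each description and separates the lines that answer the question of where from those that do not.

```python
# Hypothetical list of place names to look for; a real check would use the
# full list of LGAs and communities in the state.
KNOWN_LOCATIONS = ["Kaura Namoda", "Gusau"]


def find_location(description, locations=KNOWN_LOCATIONS):
    """Return the first known place name mentioned in a budget line, or None."""
    text = description.lower()
    for place in locations:
        if place.lower() in text:
            return place
    return None


def split_by_location(line_items, locations=KNOWN_LOCATIONS):
    """Separate budget lines into located (trackable) and unlocated ones."""
    located, unlocated = [], []
    for item in line_items:
        place = find_location(item, locations)
        if place is None:
            unlocated.append(item)
        else:
            located.append((item, place))
    return located, unlocated


# Illustrative line items: the first is the example from this post,
# the second is invented to show a line with no location.
sample_items = [
    "Construction of Borehole water pump in Kaura Namoda LGA Primary School",
    "Construction of classroom blocks",
]

located, unlocated = split_by_location(sample_items)
print("Can be tracked:", located)
print("Needs a location:", unlocated)
```

Simple text matching like this will miss misspellings and alternative spellings of place names, so treat the "needs a location" list as a starting point for manual review rather than a final answer.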

Taking participants through the data pipelines and how they relate to the Monitoring and Evaluation System

In communities like this, it is important to note that soft skills need to be taught: 80% of the participants did not know why Excel spreadsheets are used for budget data; 70% did not know there is a Google spreadsheet that works like Microsoft Excel; and none of the participants knew where to get the Nigeria budget data or what Open Data means. Well, going through the School of Data by way of the Open Data Party in this part of the world has changed that. “It was an interesting and educative 2-day event taking us through the budget cycle and how budget data relates to tracking,” said Babangida Ummar, the Chairman of the Budget Working Group.

Going forward, this group of NGOs and journalists has decided to join the trusted sources that will be monitoring service delivery at four education institutions in the state, using the Education Budget Tracker. It was an exciting two days, and we now hope to have a monthly engagement with this working group as a renewed effort to ensure service delivery in the education sector. Wondering where the next data party will happen? We are going to the South-South of Nigeria in October, Calabar to be precise, and on the last day of the month we will be rocking Abuja!